Evaluation Datasets

Evaluation Datasets are collections of test data used to measure the performance of your AI workflows.

Overview

The Datasets page provides a centralized view of all your evaluation datasets.

Managing Datasets

In the Datasets list, you can:

- View all available evaluation datasets

- See when each dataset was created

- Filter datasets by their active status

- Access detailed information for any dataset

Dataset Details

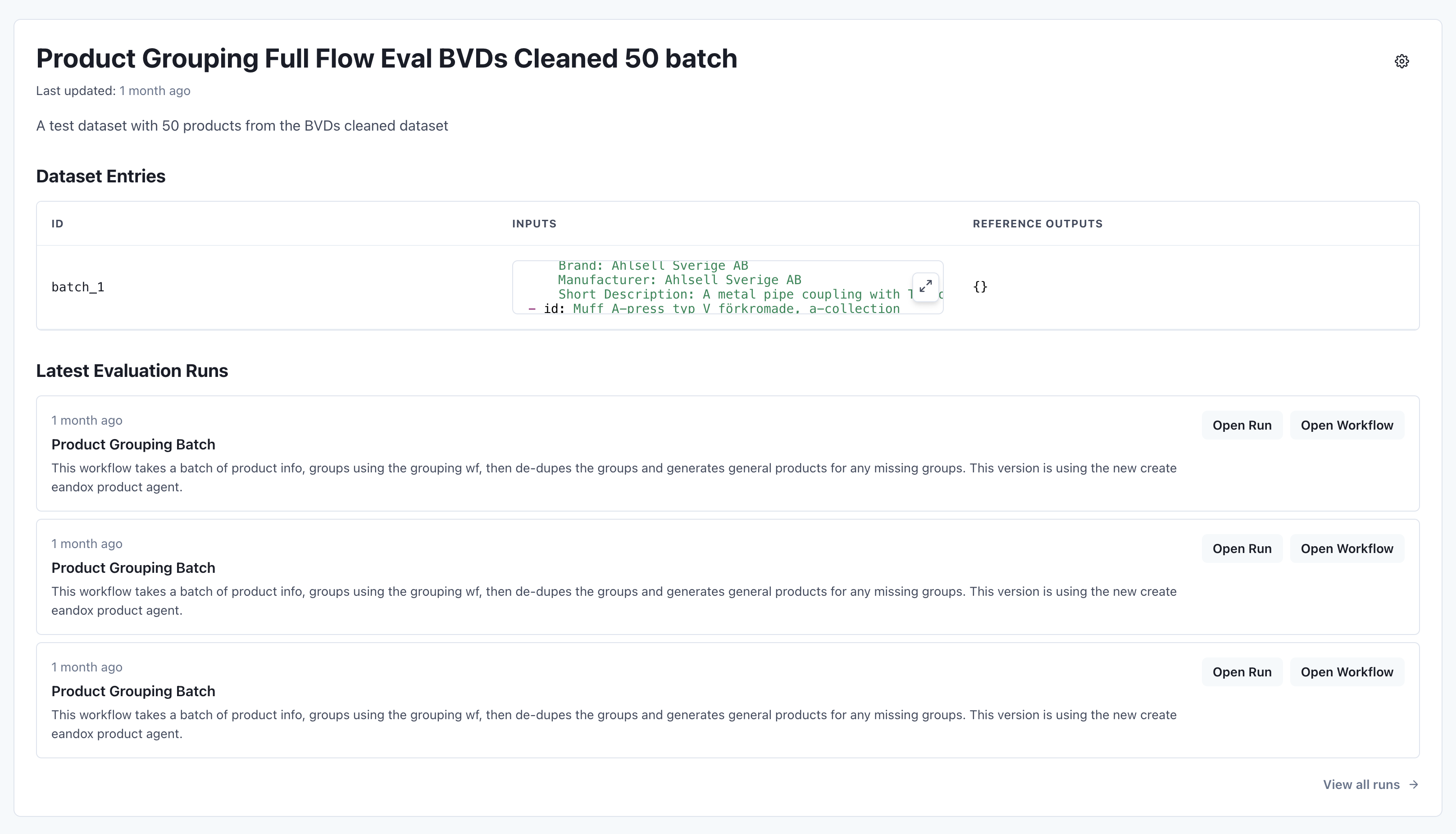

When you select a dataset, the Dataset Details page opens. It includes:

- Dataset name and description

- Last updated timestamp

- Dataset contents overview

- List of dataset entries with their inputs

- Reference outputs (expected results)

- History of evaluation runs using this dataset

Dataset Entries

Each dataset contains entries that will be used to evaluate your workflows:

- ID: A unique identifier for each entry

- Inputs: The data that will be fed into your workflow (e.g., product descriptions, queries)

- Reference Outputs: The expected outputs to compare against your workflow's results

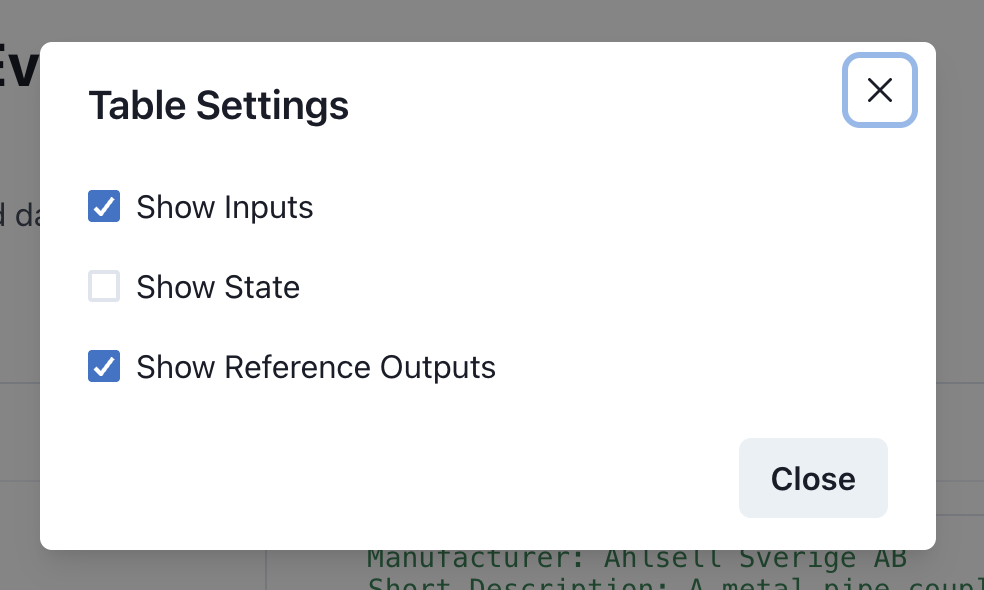
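To make the three fields concrete, here is a minimal sketch of how a single entry could be represented in code. The `DatasetEntry` class, its field names, and the sample values are illustrative assumptions, not the product's actual schema or export format.

```python
from dataclasses import dataclass, field, asdict
from typing import Any
import json


@dataclass
class DatasetEntry:
    """Hypothetical in-memory shape of one dataset entry (not an official schema)."""
    id: str                                   # unique identifier for the entry
    inputs: dict[str, Any]                    # data fed into the workflow
    reference_outputs: dict[str, Any] = field(default_factory=dict)  # expected results


# Illustrative sample values only.
entry = DatasetEntry(
    id="entry-001",
    inputs={"query": "What is the return policy?"},
    reference_outputs={"answer": "Returns are accepted within 30 days."},
)

# Serialize the entry to see all three fields side by side.
print(json.dumps(asdict(entry), indent=2))
```

Keeping inputs and reference outputs as separate mappings mirrors the separate Inputs and Reference Outputs columns described above.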

Table Settings

You can customize how dataset entries are displayed using Table Settings. The available display options are:

- Show/Hide Inputs: Toggle visibility of input data

- Show/Hide State: Toggle visibility of intermediate state information

- Show/Hide Reference Outputs: Toggle visibility of expected outputs

Latest Evaluation Runs

The Dataset Details page also lists recent evaluation runs performed with this dataset. For each run, it shows:

- Timestamp of each evaluation run

- Workflow name that was evaluated

- Brief description of the workflow

- Options to open the run details or workflow

Running Evaluations

To run an evaluation against a dataset:

1. Open the Dataset Details page

2. Select the workflow you want to evaluate

3. Configure any evaluation parameters

4. Click "Run Evaluation"
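Conceptually, an evaluation run feeds each entry's inputs to the workflow and compares the result with that entry's reference outputs. The sketch below illustrates the idea under stated assumptions: the workflow is a plain Python callable, entries are dictionaries with `id`, `inputs`, and `reference_outputs` keys, and scoring is exact match. None of these are confirmed by this page, and the `run_evaluation` function is hypothetical; the product's actual scoring may differ.

```python
from typing import Any, Callable

# Hypothetical entry shape: {"id": ..., "inputs": {...}, "reference_outputs": {...}}
Entry = dict[str, Any]


def run_evaluation(entries: list[Entry],
                   workflow: Callable[[dict[str, Any]], dict[str, Any]]) -> dict[str, Any]:
    """Run the workflow on every entry and compare with the reference (exact match)."""
    results = []
    for entry in entries:
        actual = workflow(entry["inputs"])
        results.append({
            "id": entry["id"],
            "passed": actual == entry["reference_outputs"],
            "actual": actual,
        })
    passed = sum(1 for r in results if r["passed"])
    return {"total": len(results), "passed": passed, "results": results}


def uppercase_workflow(inputs: dict[str, Any]) -> dict[str, Any]:
    """Toy stand-in for a real workflow: upper-cases the input text."""
    return {"text": inputs["text"].upper()}


entries = [
    {"id": "1", "inputs": {"text": "hello"}, "reference_outputs": {"text": "HELLO"}},
    {"id": "2", "inputs": {"text": "world"}, "reference_outputs": {"text": "World"}},
]

summary = run_evaluation(entries, uppercase_workflow)
print(summary["passed"], "of", summary["total"], "entries passed")  # 1 of 2 entries passed
```

Exact match is the simplest possible comparison; free-text outputs usually need a more tolerant comparison, which is outside the scope of this sketch.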

Best Practices

To keep your evaluation datasets reliable and easy to maintain:

- Include diverse test cases, including edge cases

- Maintain reference outputs that reflect desired behavior

- Version datasets when significant changes are made

- Use consistent formatting for inputs and reference outputs
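Some of these practices can be checked automatically before a dataset is used. The sketch below is one possible approach, not a built-in feature: `validate_entries` flags duplicate IDs, inconsistent input keys, and missing reference outputs, and `dataset_fingerprint` derives a short content hash that changes whenever the entries change, which can serve as a simple version marker. Both function names and the dictionary-based entry shape are assumptions made for illustration.

```python
import hashlib
import json
from typing import Any

Entry = dict[str, Any]  # hypothetical shape: id, inputs, reference_outputs


def validate_entries(entries: list[Entry]) -> list[str]:
    """Return a list of human-readable problems; an empty list means no issues found."""
    problems: list[str] = []
    seen_ids: set[Any] = set()
    expected_keys: set[str] | None = None
    for entry in entries:
        entry_id = entry.get("id")
        if entry_id in seen_ids:
            problems.append(f"duplicate id: {entry_id}")
        seen_ids.add(entry_id)

        # Consistent formatting: every entry should use the same input keys.
        keys = set(entry.get("inputs", {}))
        if expected_keys is None:
            expected_keys = keys
        elif keys != expected_keys:
            problems.append(f"entry {entry_id}: input keys differ from the first entry")

        if not entry.get("reference_outputs"):
            problems.append(f"entry {entry_id}: missing reference outputs")
    return problems


def dataset_fingerprint(entries: list[Entry]) -> str:
    """Short SHA-256 digest of the canonical JSON form; sensitive to entry order."""
    canonical = json.dumps(entries, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


good = [
    {"id": "1", "inputs": {"query": "a"}, "reference_outputs": {"answer": "x"}},
    {"id": "2", "inputs": {"query": "b"}, "reference_outputs": {"answer": "y"}},
]
bad = good + [{"id": "2", "inputs": {"question": "c"}, "reference_outputs": {}}]

print(validate_entries(good))       # []
print(len(validate_entries(bad)))   # 3
print(dataset_fingerprint(good) != dataset_fingerprint(bad))  # True
```

Recording the fingerprint alongside each evaluation run makes it possible to tell later whether two runs used identical dataset contents.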

Next Steps

- View Evaluation Runs - Check the results of workflow evaluations

- Return to Dashboard